→ Between 30% and 60% of crypto ecosystem activity happens in closed-source repos, which complicates measurement efforts.

→ Despite its flaws, the Developer Report is a key benchmark for ecosystem health and often serves as marketing material for top performers.

→ Foundations are evolving beyond traditional metrics to better understand and support their communities, but most still lack precise data.

→ All ecosystems struggle to get reliable data on the results of their developer marketing initiatives.

Introduction

I want to start by thanking Henri Lieutaud, Head of Developer Relations at the Starknet Foundation; Andrea Baglioni, Head of Grants at the Solana Foundation; Claire Kart, CMO at Aztec; Nicolas Consigny, DevRel at the Ethereum Foundation; Neil, Head of Ecosystem at Aptos Labs; Nader Dabit, Director of Developer Relations at EigenLayer; Sylve Chevet, Co-Founder at Hylé; and Vlad Frolov from NEAR, who generously shared their insights with me.

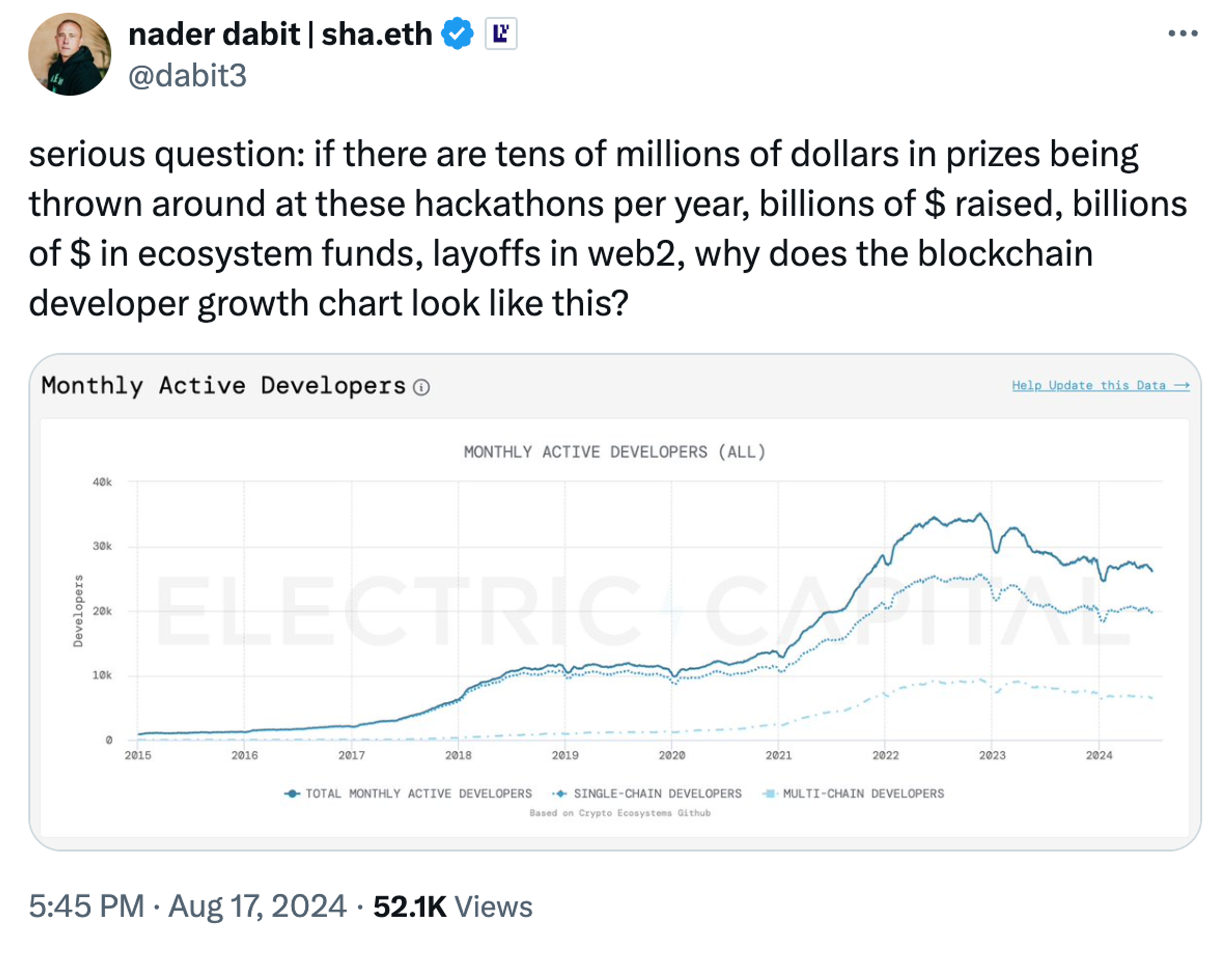

With the Electric Capital Developer Report's July 2024 update, we have fresh data to consider. This prompts a key question: Are we fully capturing the health of crypto ecosystems? While the report offers detailed metrics on developer activity, there might be more to the story.

This article takes a close look at the strategies crypto ecosystems use to assess their health. It questions the reliance on Dev Report metrics and explores how foundations are adapting to better understand and nurture their communities.

Why the Dev Report is Not Perfect

In my conversations with crypto foundations, it became clear that while the Dev Report provides essential data, it's not without its gaps. Here is some of the feedback I've gathered.

The most common concern was lack of visibility. "Significant portions of the NEAR ecosystem, including two of the top three dApps by unique active wallets, are closed-source, which the report completely misses," Vlad noted. This isn't a minor oversight. It points to a broader issue: the quality and breadth of contributions aren't fully captured.

I heard something similar from Andrea at the Solana Foundation, who said that "the report doesn't capture the full scope of Solana's activities since more than 50% of our repositories are closed-source." Neil from Aptos mentioned that most of their ecosystem's repos are also closed-source. These insights suggest that while the report is useful for high-level comparisons across ecosystems, it does not fully capture what is happening on the ground. One solution would be to consult foundations directly for estimates, but this would increase the workload for the Electric Capital report's contributors and could introduce subjectivity.

Meanwhile, at the Ethereum Foundation, the Dev Report is seen more as a general health check, not something you'd use to make precise decisions. It's not considered a key performance indicator, highlighting a cautious approach to how they interpret these metrics.

And then there's Starknet, which emphasizes qualitative over quantitative measures, especially in less mature ecosystems. For them, the true pulse of the ecosystem comes from regular, in-depth conversations with developers, not just cold, hard data. Starknet also adapts the Dev Report's methodology for its own use, relying on tools like OnlyDust for more frequent updates and tailored analyses that better serve its community's needs.

Perfect is the Enemy of Good

Despite recognizing its limitations, there's a consensus that the Dev Report is useful. It might not be perfect, but it provides a foundation upon which ecosystems can benchmark themselves and gauge relative growth.

Starknet's strategic approach to the Developer Report exemplifies its utility. Henri from Starknet explained, “We know it's watched, so we ensure our repositories are well-represented in it, allowing us to capitalize on our work when it's published.” They use the report not only to enhance their visibility but also as a benchmark to compare themselves to others in the industry. This comparison is vital for understanding where they stand and identifying areas for improvement. When the report is released, Starknet leverages the positive results as a powerful communication tool, enhancing their image and attracting more developers.

Aztec, on the other hand, finds particular value in understanding how market cycles affect developer dynamics. This insight helps them strategically time their marketing efforts, ensuring they're nurturing and retaining talent effectively during key periods.

In embracing the report, Aztec, Starknet and others like Aptos acknowledge its role not just as a metric but as a part of their strategic communications and marketing toolkit. While it might not capture every nuance of every ecosystem, it offers a snapshot that, when combined with other tools and insights, helps paint a more comprehensive picture.

Strategies to Better Measure Ecosystem Health

It's clear from these discussions with various foundations that no single tool or report can capture the full spectrum of activity. Each ecosystem has crafted its own approach to overcome the limitations of standard metrics like those in the Dev Report.

NEAR focuses on trends and community engagement, gauging ecosystem vitality through interactions that signify active involvement rather than absolute numbers. Ethereum's teams employ an array of tools tailored to the diverse needs of their projects, ensuring flexibility and precision in their measurements. Starknet emphasizes the importance of starting with what's readily measurable, such as GitHub activities, to gain initial insights. Like all OnlyDust clients, they also receive precise data on monthly onboarded developers, retention rates, and developer acquisition costs, allowing them to refine their approach with broader benchmarks for a well-rounded analysis.

As our industry moves towards more complex and varied structures, rethinking how we measure ecosystem health will become even more crucial. Soon, we might see analyses based on programming languages or tech stacks. "As chains become more abstract and modular, we're not just talking about ecosystems in the usual sense," Sylve noted. "For example, the report misses out on how Base is built on the OP Stack, illustrating a clear connection. With the rise of appchains, sovereign rollups, and provable applications, pinpointing where developers actually are will get a lot trickier."

I'm currently working on my next article focusing on grant program ROI and dev acquisition costs. If you'd like to contribute to the discussion or chat about benchmarks, please contact me on Telegram @alex_n1n.